Windows 10 Support Ending Soon

Good engineers are figuring out more energy/compute-efficient ways to train models all the time. Part of the original DeepSeek hype was that they not only cooked up a competitive model but did it with a fraction of the energy/compute needed by their competition. On the local hosting side, computer hardware is also getting more energy efficient over time. Not only do graphics cards improve in speed, they also slowly reduce the amount of power needed for the compute.

AI is a waste of energy

It depends on where that energy is coming from, how that energy is used, and the bias of the person judging its usage. When the energy comes from renewable resources without putting more emissions into the air, and the computation actually results in useful work being done to improve people's daily lives, I argue it's worth the watt-hours. Especially in a local context, with devices that take less power than a kitchen appliance for inferencing.

Greedy programmer-type tech bros without a shred of respect for human creativity, bragging about models taking away artist jobs, couldn't create something with the purpose of helping anyone but themselves if their life depended on it. But society does run on the software stacks and databases they create, so it can be argued that LLMs spitting out functioning code and acting as a local Stack Exchange is useful enough. That also gives birth to vibe coders who rely on them too much without being able to think for themselves.

Besides the loudmouth Silicon Valley inhabitants though, there's real work being done in private sectors that you and I probably don't know about.

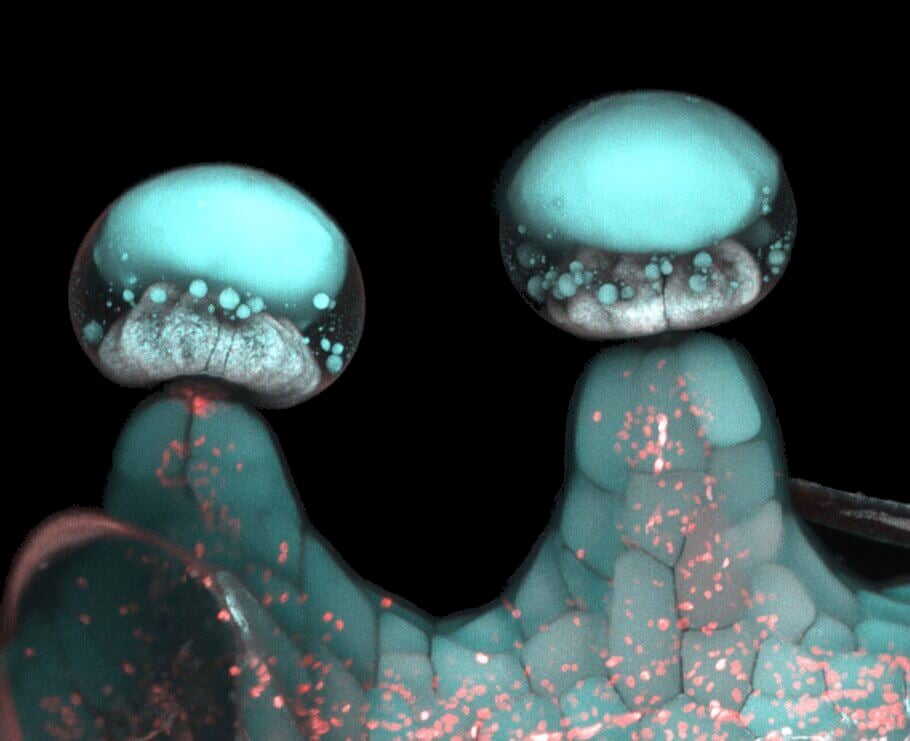

My local college is researching the use of vision/image based models to examine billions of cancer cells to potentially identify new subtle patterns for screening. Is cancer research a waste of energy?

I would one day like to prototype a way to make smart glasses useful for blind people by having a multimodal model look through the camera for them and transmit a description of what it sees through braille vibration pulses. Is prototyping accessibility tools for the disabled a waste of energy?

trying to downplay this cancer on society is dangerous

"Cancer on society" is hyperbole that reveals you're coming at us from a place of emotional antagonism. It's a tool, one with great potential if it's used right. The responsibility is on us to make sure it gets used right. Right now it's an expensive tool to create, which is the biggest problem, but:

- Once it's trained/created it can be copied and shared indefinitely, potentially for many thousands of years on the right mediums or with tradition.

- Training methods will get more efficient through improvements to computational strategy or better materials.

- As far as using and hosting the tool on the local level, it has the same power draw as whatever device you use, from a phone to a gaming desktop.

In a slightly better timeline, where people cared more about helping each other than growing their own wealth and American mega corporations were held at least a little accountable by real government oversight, companies like Meta/OpenAI would have gotten a real handslap for stealing copyrighted data to train the original models, and the tech bros would be interested in making real tools to help people in an energy-efficient way.

ai hit a wall

Yes and no. Increasing parameter size past the current biggest models doesn't seem to bring big benchmark improvements, though there may be more subtle improvements in abilities that the tests don't capture.

The only one really guilty of throwing energy and parameters at the wall hoping something would stick is Meta with the latest Llama 4 release. Everyone else has sidestepped this by improving models with better fine-tuning datasets, baking in chain-of-thought reasoning, and multimodality (vision, hearing, text all in one). There are still so many improvements being made in other ways, even if just throwing parameters at the problem eventually peters out like Moore's law.

The world burned long before AI and even computers, and it will continue to burn long after. Most people are excessive, selfish, and wasteful by nature. Islands of trash in the ocean, the ozone layer being nearly destroyed for refrigerants and hair sprays, the ice caps melting, god knows how many tons of oil burned in cars or spilled in the oceans.

Political environmentalists have done the math on just how much carbon, water, and material has been spent on every process born since the industrial revolution. Spoilers: none of the numbers are good. Model training is just the latest thing for these kinds of people to grasp onto and play blame games with.

Thanks for the suggestion and sharing your take :)

The 'okay...', 'hold on,', 'wait...', 'let me read over it again...' is part of DeepSeek's particular reasoning patterns. I think the aha moments are more of an emergent expression that it sometimes does for whatever reason than an intended step of the reasoning pattern, but I could be mistaken. I find that lower-parameter models suffer from not being able to follow their own reasoning patterns and quickly confuse themselves into wrong answers/hallucinations. It's a shame, because 8B models are fast and able to fit on a lot of GPUs, but they just can't handle keeping track of all the reasoning across many thousands of tokens.

The best luck I've had was with bigger models trained on the reasoning patterns that can also switch them on and off by toggling the `<think>` tag. My go-to model for the past two months has been DeepHermes 22B, which is toggleable DeepSeek reasoning baked into Mistral 3 Small. It's able to properly bake answers to tough logical questions, putting its reasoning together properly after thinking for many, many tokens. It's not perfect, but it gets things right more often than it gets them wrong, which is a huge step up.

Here's the full song parody from Futurama on YouTube

My favorite reference for what you've just described is 3Blue1Brown's Binary, Hanoi, and Sierpinski, which is both fascinating and super accessible for the average non-nerd.

The pressing point is that this recursive method of counting isn't just a good way of doing it, but basically the most efficient way that it can be done. There are no simpler or more efficient ways of counting.

This allows the same 'steps' to show up in other unexpected areas that ask questions about the most efficient process to do a thing. You can map the same binary counting pattern onto both the infinite paths of fractal geometry, with Sierpinski's arrowhead curve, and logic problems like Towers of Hanoi stacking. It's wild to think that on some abstract level these are all more or less equal processes.
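To make the counting/Hanoi link concrete, here's a minimal Python sketch. The function names (`ruler`, `hanoi_recursive`, `hanoi_by_counting`) are just ones I made up for illustration, and disk 0 is assumed to be the smallest disk. It only tracks which disk moves at each step, not which peg it goes to. The idea is that when you count up in binary, the position of the bit that flips from 0 to 1 tells you which disk to move.

```python
def ruler(k: int) -> int:
    """Position of the lowest set bit of k, i.e. the bit that flips
    from 0 to 1 when counting from k - 1 up to k."""
    return (k & -k).bit_length() - 1


def hanoi_recursive(n: int) -> list[int]:
    """Disk moved at each step of the usual recursive solution
    (disk 0 is the smallest, disk n - 1 the largest)."""
    if n == 0:
        return []
    smaller = hanoi_recursive(n - 1)
    # Move the n - 1 smaller disks away, move the big disk, move them back.
    return smaller + [n - 1] + smaller


def hanoi_by_counting(n: int) -> list[int]:
    """Same sequence, read straight off binary counting from 1 to 2**n - 1."""
    return [ruler(k) for k in range(1, 2 ** n)]


print(hanoi_by_counting(3))  # [0, 1, 0, 2, 0, 1, 0]
print(hanoi_by_counting(3) == hanoi_recursive(3))  # True
```

Both functions give the same list of 2**n - 1 moves, which is the recursive pattern and the counting pattern being the same thing in two costumes.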

That would require being able to grow a somewhat light textile plant such as linen, cotton, or jute. If Canadian growing seasons are anything like I imagine they are, that idea is more or less a nonstarter, because all of those need a warmer climate zone. So you're left with the dense, heavy textile that comes from shearing farm animals for wool. In modern times you can theoretically grow textile indica hemp with cold resistance and short growing cycles, then process it into softer and somewhat lighter clothing by making yarn, but that may not be part of indigenous Canadian culture.

I'm like 60% sure that during the height of Subway in the mid-2000s to early 2010s, the Subway commercials actually did advertise the footlong as a 12-inch sub for $5 (goddamn, the $5 footlong ads were catchy). However, unlike real measurements that have defined standards, as Subway enshittified they weren't forced to change the name while they slowly shrank the sandwiches. Of course, this is long enough ago that an entire generation doesn't know who Jared was, so it's okay to assume it was never a real foot long.

Wow! This was an awesome write up! Thanks for your contribution Mechanize :)

I volunteer as a developer for a decade-old open source project. A sizable amount of my contribution is just cooking up decent documentation, or rewriting old docs from the original module authors, written close to a decade ago, because they failed me information-wise when I needed them. Programmers, as it turns out, are very 'eh, the code should explain itself to anyone with enough brains to look at it' type of people. They're so lost in the sauce of being hyperfluent tech nerds, instantly understanding all variables, functions, parameters, and syntax at the very first glance at source code, that they forget the need to translate it back into regular human speak for people of varying skill levels who can barely navigate the command line.

The real answer is that the techie nerds willing to learn git and contribute to open source projects are likely to be hobbyist programmers cutting their teeth on bugfixes/minor feature enhancements, not professional programmer-designers with an eye for UI and the ability to make it or talk with those who can. Also, in open source projects it's expected that the contributor be able to pull their own weight with getting shit done, so you need to both know how to write your own code and learn how to work with specific UI formspecs. Delegating to other people is frowned upon because it's all free volunteer work, so whatever you delegate ends up eating someone else's free time and energy fixing up your PR.

We recently got an air fryer. It cuts down on prep time and makes cleanup easy, to the point I don't get upset about it anymore. Would highly recommend if you haven't got one already.

No worries, I have my achthually... moments as well. Though here's a counter-perspective. The bytes have to be pulled out of abstraction space and actually mapped to a physical medium capable of storing huge amounts of informational states, like a hard drive. It takes genius STEM-engineer-level human cognition and lots of compute power to create a dataset like WA. This makes the physical devices housing the database unique, almost one-of-a-kind objects with immense potential value for both business and consumer use. How much would a wealthy competing business owner pay for a drive containing such lucrative trade secrets, assuming it's not leaked? Probably more than a brick of gold of comparable weight, but that's just fun speculation.

You shouldn't be sorry, you didn't do anything wrong content-wise. If anything, you helped the community by sparking an important conversation leading to better-defined guidelines, which I imagine will be updated if this becomes a common enough issue.

I enjoyed the meme, LadyButterfly. The Lemmy AI hate train has gone full circle-jerk meltdown with this one, sorry it happened to you. Thanks for the laugh.

Thank you for the explanation! I never really watched the Olympics enough to see them firing guns. I would think all that high-tech equipment counts as performance enhancement, which goes against the spirit of peak human skill, but maybe the sports people who actually watch and run the Olympics think differently about external augmentations in some cases.

It's really funny in the context of some dude just chilling and vibing while casually firing off world-record-level shots.

If they added one more x onto maxx and bought some cheap property rights they could have done some serious rebranding

Who are these people?

The volunteers I talked with in the Linux Mint help IRC chats were so chill and helpful.