Stop using a browser that violates user freedom and privacy!

How to calculate the cost per output token of a local model compared to enterprise model API access

What's a better name for 'graphics cards' that describes the kind of computational work they do?

MistralAI releases Magistral, their first official reasoning models. Magistral Small 2506 released under the Apache 2.0 license!

Got any security advice for setting up a locally hosted website/external service?

DeepSeek just released updated R1 models with 'deeper and more complex reasoning patterns'. Includes an R1-distilled Qwen3 8B model boasting "10% improved performance" over the original

Advice for picking a PSU for server-class GPUs? Also a question about an adapter cable

Using a local model with basic RAG to help reference rules when playing a tabletop game

Will the motherboard in my decade-old desktop PC work with any new graphics card?

Has your local thinking model had an 'Aha!' moment similar to the one in the DeepSeek R1 paper?

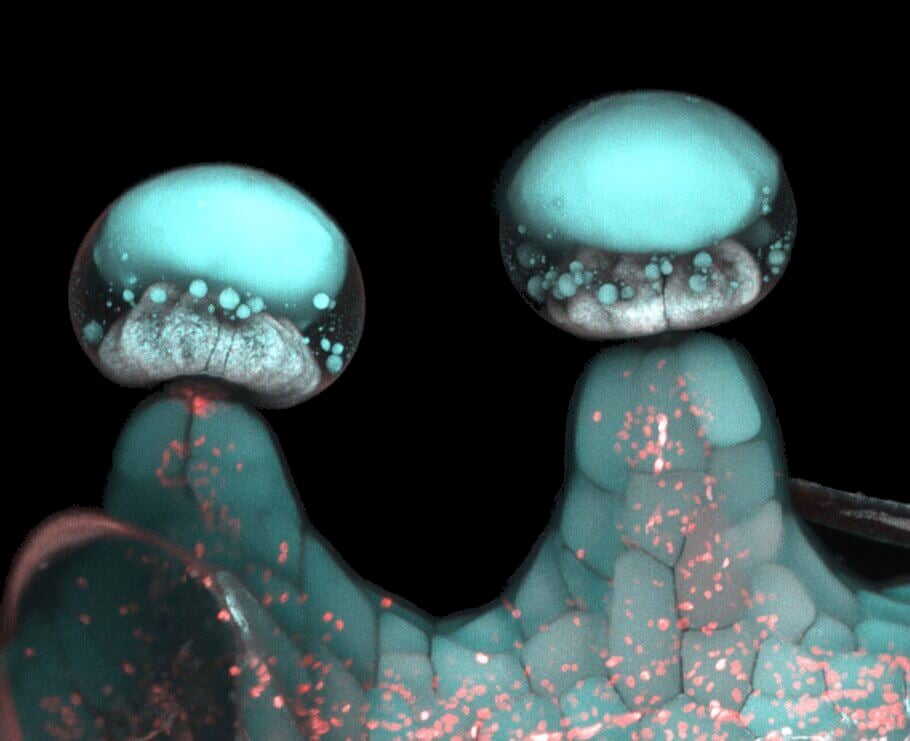

Anthropic's 'On the Biology of an LLM' got a massive update featuring fascinating deep dives into how models process information behind the scenes

NousResearch is quietly cooking up some fascinating stuff, presumably for the full release of DeepHermes!

Better watch out when those Windows-fanboy silicon lifeforms start talking shit about my favorite operating system family.

You're welcome! It's good to have a local, DRM-free backup of your favorite channels, especially the ones you come back to for sleep aid or comfort when stressed. I would download them sooner rather than later while yt-dlp still works (Google's been ramping up its war on adblockers and third-party frontends). BTW, yt-dlp also works with Bandcamp and SoundCloud to extract audio :)